Artificial intelligence and machine learning workloads demand specialized hardware that can handle massive parallel computations efficiently. Enter the Tensor Processing Unit (TPU), Google’s custom-designed chip that’s changing how we approach AI processing. Whether you’re a data scientist, ML engineer, or tech enthusiast, understanding TPUs can help you make better decisions about your AI infrastructure.

What Is a Tensor Processing Unit?

A Tensor Processing Unit (TPU) is an application-specific integrated circuit (ASIC) developed by Google specifically for accelerating machine learning workloads. Unlike general-purpose processors, TPUs are designed from the ground up to perform the matrix multiplication and tensor operations that form the backbone of neural network training and inference.
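To see why matrix multiplication is the workload worth building a chip around, consider one fully connected neural-network layer. Applied to a whole batch of inputs, it is a single matrix multiply followed by cheap element-wise work. The pure-Python sketch below is illustrative only. The function names and the ReLU activation are our own choices, not part of any TPU or framework API.

```python
def matmul(a, b):
    """Naive matrix multiply: (n x k) @ (k x m) -> (n x m), using lists of lists."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    # Each output element is a sum of k multiply-and-add steps.
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]


def dense_layer(x, w, bias):
    """Fully connected layer with ReLU, applied to a whole batch at once.

    x:    batch of inputs, shape (batch, in_features)
    w:    weights, shape (in_features, out_features)
    bias: list of length out_features
    """
    z = matmul(x, w)  # the expensive part: batch * in_features * out_features multiply-adds
    # Adding the bias and applying ReLU is cheap, element-wise work.
    return [[max(0.0, v + b) for v, b in zip(row, bias)] for row in z]


x = [[1.0, 2.0], [3.0, 4.0], [0.5, -1.0]]  # batch of 3 inputs with 2 features each
w = [[0.1, -0.2, 0.3], [0.4, 0.5, -0.6]]   # maps 2 features to 3 outputs
bias = [0.0, 0.1, 0.0]
print(dense_layer(x, w, bias))
```

In real models all three dimensions run into the thousands. The matrix multiply therefore dominates the cost, and that is the part a TPU is built to accelerate.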

Google first announced TPUs in 2016, revealing that the chips had already been running in its data centers for over a year, powering services like Google Search, Google Photos, and Google Translate. Since then, TPUs have evolved through multiple generations, each offering significant improvements in performance and efficiency.

How TPUs Work: The Architecture Behind the Speed

TPUs achieve their impressive performance through a specialized architecture optimized for machine learning operations. At the core of a TPU is a matrix multiplication unit (MXU). The MXU is a large grid of multiply-and-add cells that together perform more than ten thousand multiply-and-add operations in a single clock cycle.
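To make this concrete, here is a toy, cycle-by-cycle simulation of a small grid of multiply-accumulate cells arranged as a systolic array, the design described in the list below. It is a sketch under simplifying assumptions. It uses the textbook output-stationary dataflow, in which each cell keeps one output value. Google's published MXU designs instead hold weights stationary in the array. The function name `systolic_matmul` is hypothetical. A real MXU is far larger than the 2 × 2 example. A 128 × 128 grid, for instance, holds 16,384 cells, and all of them can fire on the same cycle once the pipeline is full.

```python
def systolic_matmul(a, b):
    """Multiply a (n x k) by b (k x m) on a simulated n x m systolic array.

    Toy output-stationary model: cell (i, j) accumulates c[i][j]. Rows of `a`
    enter from the left and columns of `b` from the top, each delayed by one
    cycle per row/column so that matching operands meet in the right cell.
    Returns (result, cycles, macs).
    """
    n, k, m = len(a), len(b), len(b[0])
    acc = [[0] * m for _ in range(n)]
    a_reg = [[None] * m for _ in range(n)]  # value each cell passes to its right
    b_reg = [[None] * m for _ in range(n)]  # value each cell passes downward
    cycles = k + n + m - 2  # cycles until the last operand pair reaches cell (n-1, m-1)
    macs = 0
    for t in range(cycles):
        new_a = [[None] * m for _ in range(n)]
        new_b = [[None] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                # Left edge reads a skewed stream of row i of `a`;
                # other cells take the value their left neighbour held last cycle.
                if j == 0:
                    p = t - i
                    new_a[i][j] = a[i][p] if 0 <= p < k else None
                else:
                    new_a[i][j] = a_reg[i][j - 1]
                # Top edge reads a skewed stream of column j of `b`;
                # other cells take the value the cell above held last cycle.
                if i == 0:
                    p = t - j
                    new_b[i][j] = b[p][j] if 0 <= p < k else None
                else:
                    new_b[i][j] = b_reg[i - 1][j]
        a_reg, b_reg = new_a, new_b
        # Every cell holding a valid operand pair does one multiply-accumulate.
        # Each value read from memory is reused by a whole row or column of cells.
        for i in range(n):
            for j in range(m):
                if a_reg[i][j] is not None and b_reg[i][j] is not None:
                    acc[i][j] += a_reg[i][j] * b_reg[i][j]
                    macs += 1
    return acc, cycles, macs


result, cycles, macs = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(result, cycles, macs)
```

The cycle count `k + n + m - 2` reflects the time spent filling and draining the pipeline. Real hardware overlaps consecutive operations to hide most of that cost.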

The key architectural features include:

- Systolic Array Design: TPUs use a systolic array architecture, in which data flows through a grid of processing elements in a rhythmic, pipelined fashion. Each value fetched from memory is reused by many processing elements as it passes through the array, which minimizes memory access. This matters for performance because memory bandwidth often becomes the bottleneck in AI computations.

- High Bandwidth Memory: Starting with TPU v2, TPUs incorporate high-bandwidth memory (HBM). HBM provides the memory bandwidth needed to feed data to the processing units fast enough to keep them busy.

- Reduced Precision Computing: TPUs primarily operate on reduced-precision formats like bfloat16 and int8, which provide sufficient accuracy for most neural network operations while allowing more computations per watt and per square millimeter of silicon.
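bfloat16 keeps float32's 8-bit exponent, and therefore its numeric range, but shortens the mantissa from 23 bits to 7, so each value fits in 16 bits. The sketch below converts a Python float to bfloat16 bits by keeping the top half of its float32 encoding with round-to-nearest-even. It then converts the bits back to show how much precision is lost. This is a simplified illustration: it does not handle NaN, and the function names are hypothetical. ML frameworks provide their own bfloat16 types.

```python
import struct


def to_bfloat16_bits(x: float) -> int:
    """Round x to bfloat16 and return its 16-bit pattern (NaN is not handled)."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]  # float32 bit pattern
    # Round to nearest, ties to even: add 0x7FFF plus the lowest bit that survives.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def from_bfloat16_bits(b: int) -> float:
    """Expand a bfloat16 bit pattern back to a float (the low 16 bits become zero)."""
    return struct.unpack("<f", struct.pack("<I", b << 16))[0]


for value in (3.14159, 1e38, 0.001):
    rounded = from_bfloat16_bits(to_bfloat16_bits(value))
    print(f"{value!r:>10} -> {rounded!r:<24} relative error {abs(rounded - value) / value:.2e}")

# IEEE float16 also uses 16 bits but has only a 5-bit exponent, so large values overflow.
try:
    struct.pack("<e", 1e38)
except OverflowError:
    print("1e38 does not fit in float16, but it does fit in bfloat16")
```

The relative error stays below about 0.4% across the whole range. Neural networks tolerate error of that size in most layers, and in exchange bfloat16 halves memory and bandwidth compared with float32.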

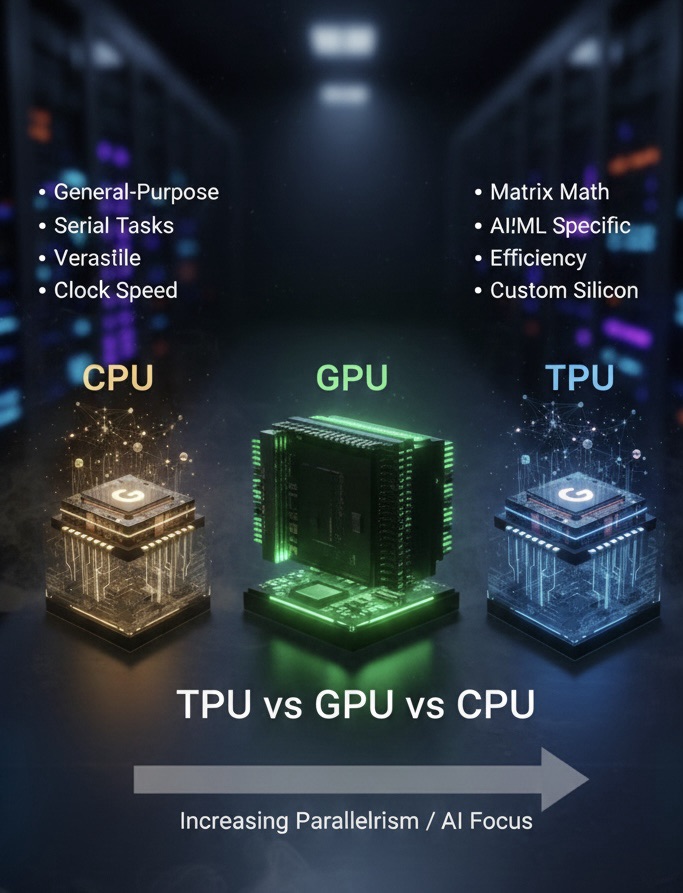

TPU vs GPU vs CPU: Understanding the Differences

Choosing the right processor for AI workloads requires understanding how TPUs compare to more traditional options.

- CPUs (Central Processing Units) are general-purpose processors designed to handle a wide variety of tasks. They excel at sequential processing and complex logic but struggle with the massive parallel computations required by neural networks. For small-scale ML experiments or low-volume inference, CPUs may be sufficient and cost-effective.

- GPUs (Graphics Processing Units) were originally designed for rendering graphics but have become popular for machine learning due to their parallel processing capabilities. GPUs offer flexibility and can handle various types of parallel workloads beyond just ML. They’re well-supported by popular frameworks like TensorFlow and PyTorch, making them a versatile choice.

- TPUs are purpose-built for the tensor operations at the heart of neural networks. They can deliver excellent performance per watt on the ML workloads they support and can significantly reduce training time for large models. However, they’re less flexible than GPUs and work best with the TensorFlow and JAX frameworks.

For training large language models or computer vision systems at scale, TPUs can offer excellent performance and cost-efficiency. For research requiring custom operations or mixed workloads, GPUs might be more suitable.
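One reason TPUs shine on large models is arithmetic intensity: the number of floating-point operations performed per byte moved to or from memory. Multiplying two n × n matrices costs about 2n³ operations but moves only about 3n² values. Intensity therefore grows with n, and a large matrix unit stays busy instead of waiting on memory. The sketch below computes this ratio. Its peak-throughput and bandwidth figures are hypothetical round numbers chosen for illustration, not specifications of any real TPU or GPU.

```python
def matmul_intensity(m: int, k: int, n: int, bytes_per_element: int = 2) -> float:
    """FLOPs per byte for C = A @ B, with A (m x k) and B (k x n).

    Assumes each operand and the result cross the memory bus exactly once,
    an idealized lower bound on traffic. bytes_per_element=2 models bfloat16.
    """
    flops = 2 * m * k * n  # one multiply plus one add per multiply-accumulate
    bytes_moved = bytes_per_element * (m * k + k * n + m * n)
    return flops / bytes_moved


# HYPOTHETICAL accelerator: round numbers, not the spec of any real chip.
PEAK_FLOPS = 100e12     # 100 TFLOP/s
MEM_BANDWIDTH = 1e12    # 1 TB/s
MACHINE_BALANCE = PEAK_FLOPS / MEM_BANDWIDTH  # FLOPs per byte needed to stay compute-bound


def bound(m: int, k: int, n: int) -> str:
    """Classify a matmul as limited by compute or by memory bandwidth."""
    return "compute-bound" if matmul_intensity(m, k, n) >= MACHINE_BALANCE else "memory-bound"


for size in (64, 256, 1024, 4096):
    print(size, round(matmul_intensity(size, size, size), 1), bound(size, size, size))
```

With these made-up figures, the 64 × 64 and 256 × 256 multiplies are memory-bound, while 1024 × 1024 and larger are compute-bound. The crossover point for real hardware depends on its actual specifications.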

TPU Generations and Evolution

Google has released multiple generations of TPUs, each bringing substantial improvements:

The first-generation TPU (announced in 2016) focused on inference workloads, helping Google’s services respond faster to user queries. TPU v2 (2017) added training capabilities and introduced TPU Pods: clusters of TPUs connected by high-speed interconnects. TPU v3 (2018) substantially increased performance and introduced liquid cooling to handle the higher power density.

More recent generations, including TPU v4 and the v5 family (v5e and v5p), have continued this trajectory with higher performance, improved power efficiency, and better support for emerging ML techniques such as sparse models and mixture-of-experts architectures. According to Google, TPU v5p, announced in 2023, offers nearly three times the training performance of v4 for large language models.

Real-World Applications and Use Cases

TPUs power some of the most demanding AI applications in production today. Google uses TPUs extensively for its own products and research, including training large language models such as PaLM and Gemini, processing billions of images in Google Photos, and providing real-time translation across dozens of languages.

Beyond Google, researchers and companies use Cloud TPUs for training state-of-the-art models in natural language processing, computer vision, and recommendation systems. The speed advantage of TPUs becomes particularly valuable when training large transformer models that might otherwise take weeks or months on other hardware.

Scientific research has also benefited from TPU acceleration, with applications ranging from protein folding prediction (AlphaFold) to climate modeling and drug discovery.

Getting Started with TPUs

If you’re interested in leveraging TPUs for your machine learning projects, Google offers several ways to access them:

- Colab notebooks for experimentation.
- Cloud TPU VMs on Google Cloud for more control.
- TPU Pods, or slices of them, for the largest training jobs.

The primary frameworks for TPU development are TensorFlow and JAX, both of which offer excellent TPU support. PyTorch can also run on TPUs through the PyTorch/XLA project, although its TPU ecosystem is less mature. If you’re new to TPUs, a free Colab TPU runtime is an easy way to experiment and find out whether TPUs will benefit your specific workload.
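If you choose JAX, a quick way to confirm that your runtime actually sees a TPU is to list the devices JAX reports. This minimal sketch assumes JAX is installed, along with its TPU support on TPU hosts. `jax.devices()` and each device's `platform` attribute are part of JAX. The helper names `accelerator_platforms` and `has_tpu` are hypothetical. TensorFlow and PyTorch/XLA have their own ways of querying devices, which are not shown here.

```python
def accelerator_platforms() -> list:
    """Return the platform names of the devices JAX can see, such as "tpu" or "cpu".

    Returns an empty list if JAX is not installed, so the snippet also runs
    on machines without any accelerator software.
    """
    try:
        import jax
    except ImportError:
        return []
    return [device.platform for device in jax.devices()]


def has_tpu() -> bool:
    """True if at least one device visible to JAX is a TPU."""
    return "tpu" in accelerator_platforms()


if __name__ == "__main__":
    platforms = accelerator_platforms()
    print("Visible devices:", platforms or "none (is JAX installed?)")
    print("TPU available:", has_tpu())
```

On a correctly configured TPU runtime, the list should contain one `"tpu"` entry per TPU core or chip JAX exposes. On an ordinary machine with JAX installed, you will typically see only `"cpu"`.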

The Future of TPUs and AI Hardware

As AI models continue to grow in size and complexity, specialized hardware like TPUs will play an increasingly important role in making advanced AI capabilities accessible and sustainable. Google continues to invest heavily in TPU development, with each generation bringing innovations in performance, efficiency, and capabilities.

The competition in AI chip design is intensifying, with companies like Amazon (Trainium), Microsoft (Maia), and numerous startups developing their own AI accelerators. This competition drives innovation and will likely lead to even more powerful and efficient AI hardware in the coming years.

For organizations building AI at scale, understanding TPUs and other specialized accelerators is becoming essential. The choice of hardware can dramatically impact not just training speed and inference latency, but also operational costs and environmental footprint.

Frequently Asked Questions About TPUs

What does TPU stand for?

TPU stands for Tensor Processing Unit. It’s a specialized processor designed by Google specifically for accelerating machine learning and artificial intelligence workloads, particularly operations involving tensors (multi-dimensional arrays used in neural networks).

Are TPUs better than GPUs?

TPUs aren’t universally “better” than GPUs, but they excel at specific tasks. TPUs can offer superior performance and energy efficiency for training and running large-scale neural networks, especially with TensorFlow and JAX. GPUs provide more flexibility and broader framework support, making them better for research, mixed workloads, or applications requiring custom operations. The best choice depends on your specific use case, budget, and framework preferences.

Can I use TPUs for free?

Yes, Google offers limited free TPU access through Google Colab. Free Colab users can get TPU runtimes for experimentation and learning, subject to availability and usage limits. For production workloads or guaranteed access, you’ll need Google Cloud’s paid Cloud TPU service, although Google Cloud often provides free credits to new accounts.

What frameworks support TPUs?

TensorFlow has the most mature and complete TPU support, and both are developed by Google. JAX, another Google framework, also offers excellent TPU integration. PyTorch supports TPUs through the PyTorch/XLA project, though that support is less mature than TensorFlow’s. Most other frameworks don’t currently support TPUs directly.

How much faster are TPUs than GPUs?

The speed difference varies significantly depending on the workload, model architecture, and specific GPU model being compared. For large transformer models and other operations TPUs are optimized for, they can be several times faster than comparable GPUs while using less energy, though published figures depend heavily on the benchmark. For some workloads, particularly those requiring custom operations or those not optimized for TPU architecture, GPUs may perform equally well or better.
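Because published numbers vary so much, the most reliable comparison is to time your own workload on each candidate device. Below is a generic timing helper, a sketch that assumes the function you pass in finishes its work before returning. The names `benchmark` and `speedup` are hypothetical. The warm-up calls matter on TPUs, because XLA-based frameworks typically compile a function on its first call. That one-time cost would otherwise distort the measurement.

```python
import statistics
import time
from typing import Any, Callable


def benchmark(fn: Callable[..., Any], *args: Any, warmup: int = 3, iters: int = 10) -> float:
    """Return the median wall-clock time, in seconds, of fn(*args).

    Warm-up calls run first and are not timed. On accelerators they absorb
    one-time costs such as XLA compilation. With JAX, pass a function that
    blocks on its result (e.g. lambda x: f(x).block_until_ready()), because
    JAX dispatches work asynchronously and may return before it finishes.
    """
    if iters < 1:
        raise ValueError("iters must be at least 1")
    for _ in range(warmup):
        fn(*args)
    timings = []
    for _ in range(iters):
        start = time.perf_counter()
        fn(*args)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)  # the median is less sensitive to outliers than the mean


def speedup(baseline_seconds: float, candidate_seconds: float) -> float:
    """How many times faster the candidate is than the baseline (> 1 means faster)."""
    return baseline_seconds / candidate_seconds


# Usage with a stand-in CPU workload; substitute the same model step on each device you compare.
t = benchmark(sum, range(100_000))
print(f"median time per call: {t:.6f} s")
```

Run the same model step, with the same batch size and precision, on each device. Then compare the medians with `speedup`. Otherwise the comparison mixes hardware differences with configuration differences.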

Can I buy a TPU for my personal computer?

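Not a Cloud TPU. The data-center TPUs described in this article are not sold as standalone hardware; they’re available only as a service through Google Cloud. Google does sell a much smaller Edge TPU under its Coral brand, as USB and M.2 accelerators. However, it is designed for low-power inference with small models, not for training. If you need local AI acceleration for training or general workstation use, consumer GPUs from NVIDIA or AMD are your best option.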

What is a TPU Pod?

A TPU Pod is a cluster of TPUs connected by Google’s high-speed interconnect network. Pods can scale from tens to thousands of TPU chips working together, enabling training of extremely large models that wouldn’t fit on a single device. TPU Pods represent some of the most powerful AI training infrastructure available today.
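Pods make it possible to split a single model across chips. One common approach, a form of tensor (model) parallelism, divides a layer's weight matrix so that each chip stores and multiplies only its share. The pure-Python sketch below simulates this, with list entries standing in for chips. The column-wise split and the function names are illustrative assumptions. In practice, frameworks such as JAX manage the sharding and the interconnect communication for you.

```python
def matvec(x, w):
    """x: vector of length k, w: k x n matrix (list of rows) -> vector of length n."""
    return [sum(x[p] * w[p][j] for p in range(len(x))) for j in range(len(w[0]))]


def shard_columns(w, num_devices):
    """Split w's columns into num_devices contiguous shards, one per simulated chip."""
    n = len(w[0])
    if n % num_devices != 0:
        raise ValueError("columns must divide evenly across devices")
    size = n // num_devices
    return [[row[d * size:(d + 1) * size] for row in w] for d in range(num_devices)]


def sharded_matvec(x, w_shards):
    """Each simulated chip multiplies x by only its own shard of the weights."""
    partial_outputs = [matvec(x, shard) for shard in w_shards]
    # Concatenating the slices stands in for an all-gather over the interconnect.
    return [value for part in partial_outputs for value in part]


x = [1, 2, 3]
w = [[1, 0, 2, 1],
     [0, 1, 1, 3],
     [2, 2, 0, 1]]
shards = shard_columns(w, num_devices=2)
print(sharded_matvec(x, shards), matvec(x, w))
```

No simulated chip ever holds the full weight matrix, yet the combined result matches the single-device computation. The same idea lets a Pod train models whose weights exceed one chip's memory.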

Conclusion

Tensor Processing Units represent a significant leap forward in AI hardware, offering high performance for many machine learning workloads through purpose-built architecture. While they may not replace GPUs and CPUs entirely, TPUs have carved out a crucial role in the AI ecosystem, particularly for large-scale training and inference.

Whether you’re training the next breakthrough language model or optimizing inference costs for a production ML system, understanding TPUs and how they compare to alternatives will help you make informed infrastructure decisions. As AI continues its rapid advancement, specialized processors like TPUs will remain at the forefront of making powerful AI accessible and practical.
